Enhance your RAG pipeline in PostgreSQL by incorporating facets and disciplined prompt design for more precise and relevant document retrieval.

In this blog post, we return to the retrieval pipeline, but this time we’ll make it smarter by including facets in the search. By filtering on structured metadata first and only then running semantic similarity, we can focus retrieval on the exact subset of documentation that matters. This hybrid approach improves precision, reduces noise, and sets us up to hand cleaner, more relevant context to the LLM.

We’ll also go deeper into prompt design: how to structure the context and user query so that the model consistently stays grounded in the retrieved information and avoids drifting into hallucination.

Why facets matter in retrieval

Embeddings are powerful, but without guardrails they can cast too wide a net. A query like “How do I configure replication in version 17 on Linux?” doesn’t just need semantic similarity; it also needs to exclude answers that apply to Windows or to older releases. That’s exactly what facets give us.

Because we stored structured metadata alongside each chunk, we can now write hybrid queries in PostgreSQL that filter on facets before applying vector similarity. This narrows the candidate set down to only the chunks that match the context we know is relevant.

Here’s a simplified query that combines both:

```sql
SELECT *
FROM fep_manual_chunks
WHERE version = '17'
  AND os = 'linux'
  AND component = 'administration'
ORDER BY embedding <#> ai.embed('text-embedding-3-small', 'configure admin tool')
LIMIT 6;
```

This query considers only version 17 Linux administration docs, then ranks them by semantic closeness to the user query. The effect is that we hand the LLM a much cleaner context set, and the query can also run faster, because PostgreSQL has far fewer rows to compare.
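To make the two steps of that query concrete, here is a minimal in-memory Python sketch of the same logic: filter on every facet, then sort the survivors by pgvector’s `<#>` operator, which returns the negative inner product, so ascending order puts the most similar chunks first. The `Chunk` class, the headings, and the two-dimensional vectors are hypothetical toy data, not real rows or embeddings.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    # Hypothetical stand-in for a row of fep_manual_chunks (toy data only).
    heading: str
    version: str
    os: str
    component: str
    embedding: list[float]


def neg_inner_product(a: list[float], b: list[float]) -> float:
    """Mimics pgvector's <#> operator: the negative inner product."""
    return -sum(x * y for x, y in zip(a, b))


def hybrid_search(chunks, query_vec, facets, limit=6):
    # Step 1 (the WHERE clause): keep chunks whose metadata matches every facet.
    candidates = [
        c for c in chunks
        if all(getattr(c, key) == value for key, value in facets.items())
    ]
    # Step 2 (ORDER BY ... LIMIT): smallest <#> value means most similar.
    candidates.sort(key=lambda c: neg_inner_product(c.embedding, query_vec))
    return candidates[:limit]


chunks = [
    Chunk("WebAdmin on Linux", "17", "linux", "administration", [0.9, 0.1]),
    Chunk("WebAdmin on Windows", "17", "windows", "administration", [0.95, 0.05]),
    Chunk("Backup on Linux", "17", "linux", "administration", [0.1, 0.9]),
    Chunk("WebAdmin v16", "16", "linux", "administration", [0.9, 0.1]),
]

results = hybrid_search(
    chunks,
    query_vec=[1.0, 0.0],
    facets={"version": "17", "os": "linux", "component": "administration"},
)
print([c.heading for c in results])
```

The Windows chunk is actually the closest vector to the query, yet it never reaches the ranking step; that is precisely the noise the facet filter is there to remove.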

Building context for the LLM

Once we’ve narrowed down to a handful of relevant chunks, the next step is to assemble them into a prompt for the model. This part is deceptively simple but makes a big difference to the quality of the answers.

The key is consistency. The model should always receive the context in a predictable format. One straightforward way is to number each chunk and provide both its heading and body text. That way, the model can ‘see’ the structure of the documentation even before it generates an answer.

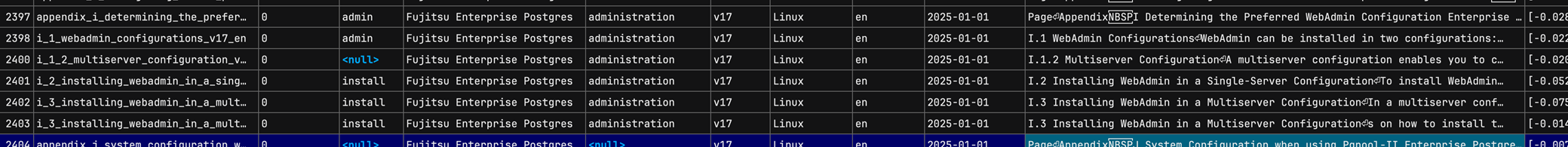

For example, for a slice of the fep_manual_chunks table filtered to version 17 Linux administration, an effective prompt would be:

```text
You are a technical assistant for Fujitsu Enterprise Postgres documentation.
Use only the provided context to answer the question.
If the context does not contain the answer, reply 'The documentation does not specify.'

Context:
[1] I.1 WebAdmin Configurations WebAdmin can be installed in two…
[2] I.2 Installing WebAdmin in a Single-Server Configuration…
[3] I.3 Installing WebAdmin in a Multi-server Configuration…

Question:
How do I configure a multi-server administration tool on version 17 on Linux?
```

This isn’t flashy prompt engineering; it’s just disciplined formatting. The chunks are clear, scoped, and easy for the model to work with.
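Because the layout has to be identical on every call, it is safer to generate it than to hand-edit it. Below is a minimal Python sketch, assuming the retrieved rows arrive as (heading, body) pairs. `build_prompt` and `SYSTEM_INSTRUCTIONS` are hypothetical names, the instruction text is copied from the example prompt above, and the chunk bodies are placeholders.

```python
SYSTEM_INSTRUCTIONS = "\n".join([
    "You are a technical assistant for Fujitsu Enterprise Postgres documentation.",
    "Use only the provided context to answer the question.",
    "If the context does not contain the answer, reply 'The documentation does not specify.'",
])


def build_prompt(chunks: list[tuple[str, str]], question: str) -> str:
    """Lay out instructions, numbered context, and question in a fixed order.

    Each chunk is a (heading, body) pair taken from the retrieved rows.
    """
    numbered = "\n".join(
        f"[{i}] {heading} {body}" for i, (heading, body) in enumerate(chunks, start=1)
    )
    return f"{SYSTEM_INSTRUCTIONS}\n\nContext:\n{numbered}\n\nQuestion:\n{question}"


# Placeholder bodies; in practice these come from the hybrid query results.
prompt = build_prompt(
    [
        ("I.1 WebAdmin Configurations", "<chunk body>"),
        ("I.2 Installing WebAdmin in a Single-Server Configuration", "<chunk body>"),
        ("I.3 Installing WebAdmin in a Multi-server Configuration", "<chunk body>"),
    ],
    "How do I configure a multi-server administration tool on version 17 on Linux?",
)
print(prompt)
```

Keeping the numbering in code also means the model’s references (“see [2]”) always map back to the same retrieved row.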

Prompt discipline vs. prompt engineering

There’s a bit of hype around ‘prompt engineering’, but in RAG systems, it’s less about clever phrasing and more about discipline. The retrieval side (facets plus semantic search) does the heavy lifting. The prompt’s job is simply to keep the model inside the rails.

That said, you can experiment with variations:

- Role framing: Framing the model as a documentation assistant vs. as a senior DBA helping a junior engineer can change tone and depth.

- Answer format: Free text for conversational responses, bullet lists for quick steps, or JSON when the output needs to be machine-consumable.

- Fallback handling: Reinforcing that the model should explicitly say “The documentation does not specify” when the answer isn’t present.
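One way to experiment with these variations without copy-editing whole prompts is to treat role and answer format as switches while keeping the grounding rule and fallback sentence fixed. A minimal Python sketch under that assumption: the role texts, format instructions, JSON keys, and the `build_instructions`/`is_fallback` helpers are all hypothetical; only the grounding rule and the fallback wording come from the example prompt.

```python
FALLBACK = "The documentation does not specify."

# Hypothetical role framings.
ROLES = {
    "assistant": "You are a technical assistant for Fujitsu Enterprise Postgres documentation.",
    "mentor": "You are a senior DBA helping a junior engineer with Fujitsu Enterprise Postgres.",
}

# Hypothetical answer-format instructions.
FORMATS = {
    "text": "Answer in clear prose.",
    "bullets": "Answer as a short bulleted list of steps.",
    "json": 'Answer as JSON with keys "answer" and "sources" (a list of context numbers).',
}


def build_instructions(role: str = "assistant", answer_format: str = "text") -> str:
    # Role and format vary; the grounding and fallback rules never do.
    return "\n".join([
        ROLES[role],
        "Use only the provided context to answer the question.",
        FORMATS[answer_format],
        f"If the context does not contain the answer, reply '{FALLBACK}'",
    ])


def is_fallback(answer: str) -> bool:
    # Lets the application detect "no answer" exactly instead of showing a guess.
    return answer.strip().strip("'\"") == FALLBACK


print(build_instructions("mentor", "bullets"))
print(is_fallback("'The documentation does not specify.'"))
```

Fixing the fallback sentence verbatim is the design choice that matters here: it turns “the model didn’t find anything” into a string the application can test for.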

These tweaks don’t replace good retrieval, but they do make the system more usable in different contexts.

With facets filtering the candidate set, embeddings ranking by semantic closeness, and prompts constraining the model, we now have a RAG pipeline that is far more reliable than the embedding-only version.

The result is not just better answers, but answers you can trust, grounded in the exact slice of documentation that’s relevant to the user’s environment.

Coming up next

In the next post, we’ll push beyond static retrieval and prompts into agents and agentized RAG. Instead of hard-coding the retrieval flow ourselves, we’ll explore how an LLM can decide which queries to run, how to refine them, and how to combine multiple passes of retrieval. This is where the line between retrieval and reasoning starts to blur, and where the system becomes more autonomous in answering complex questions.

Other blog posts in this series

- Why Enterprise AI starts with the database - Not the data swamp

- Understanding similarity vs distance in PostgreSQL vector search

- What is usually embedded in vector search: Sentences, words, or tokens?

- How to store and query embeddings in PostgreSQL without losing your mind

- Embedding content in PostgreSQL using Python: Combining markup chunking and token-aware chunking

- From embeddings to answers: How to use vector embeddings in a RAG pipeline with PostgreSQL and an LLM

- Improving RAG in PostgreSQL: From basic retrieval to smarter context