Learn how to efficiently embed content into PostgreSQL using Python, focusing on markup chunking and token-aware chunking for optimal semantic search and retrieval.

Chunking content for better embeddings in PostgreSQL

Embeddings are only as useful as the input they’re based on, and the difference between an effective system and an unreliable one often comes down to whether the input was chunked appropriately.

Embeddings are only as useful as the input they’re based on, and the difference between an effective system and an unreliable one often comes down to whether the input was chunked appropriately.

Modern embedding models, like OpenAI’s text-embedding-3-small, process text in terms of tokens, not characters or words. These tokens are model-specific and reflect how the language model internally segments input. Each model also enforces a hard limit on the number of tokens per request, often in the thousands, but finite, nonetheless. If you blindly send large blocks of text for embedding, you’ll either hit this limit and trigger errors, or end up with truncated embeddings that lose important information. Worse, if your chunks are too small or misaligned, you’ll embed incomplete or incoherent thoughts, weakening semantic search and degrading the retrieval stage in a RAG pipeline.

Let’s look at the use case of working with technical manuals and embedding content into PostgreSQL for Retrieval-Augmented Generation (RAG). This requires a careful balance between preserving the document's semantic structure and ensuring the chunk sizes are compatible with the token limits of modern language models. If chunk sizes exceed the model's window, the LLM may not process the input efficiently or may even truncate critical context, leading to incomplete or inaccurate responses. On the other hand, blindly splitting content to fit token limits risks severing important logical units of information, such as configuration steps, procedures, or descriptive sections.

Separation of concerns

While PostgreSQL can handle many parts of the embedding workflow thanks to extensions like pgvector and pgai, token-aware chunking and embedding preparation are best handled in Python, outside the database. There are several reasons for this.

|

First, tokenization logic is tightly coupled to the model you’re embedding with, and tools like tiktoken (used for OpenAI models) or the Hugging Face tokeniser classes aren’t readily available inside PostgreSQL. While you could technically build and expose tokenisation functionality inside PL/Python, it introduces complexity and maintenance burdens. These libraries depend on native Python packages and language model specific configurations that don't translate cleanly into the constrained, sandboxed environment of PostgreSQL’s in-database procedural languages. |

|

Second, managing batch requests and retries for external embedding APIs is more natural and robust when done in Python. These APIs can fail due to rate limits, network errors, or token overflows. Python allows you to gracefully handle these errors, apply retries, logging, and request throttling. Doing this purely in SQL or PL/Python would be awkward.. |

|

Third, embedding typically happens as a preprocessing step. You’re often loading large documents, cleaning or normalising the content, breaking it into token-aligned segments, and embedding those for long-term storage, not on the fly during SQL queries. This preprocessing fits better into a Python ETL style script or notebook workflow. You can version and test your logic independently, then write clean records into your PostgreSQL tables.. |

By contrast, PostgreSQL is ideal for managing the resulting embeddings by storing them efficiently using pgvector, indexing them with approximate nearest neighbour search, joining them to metadata, and querying them in combination with traditional SQL filters. It’s where you use the embeddings, not where you necessarily generate them. Separating concerns in this way, using Python for controlled embedding and PostgreSQL for fast retrieval and analytics, gives you more flexibility, reliability, and transparency.

Demo breakdown

In this article, we'll build a practical demo that shows how to use python and PostgreSQL to:

- Chunk an HTML product manual by its markup structure to preserve its inherent logical organisation.

- Apply token-aware chunking to large sections to maintain LLM compatibility.

- Store both the chunks and embeddings in PostgreSQL using pgvector to enable fast and efficient similarity searches.

- Compare retrieval effectiveness between markup-based and token-based chunking to understand which provides more relevant and useful results.

Preparing the environment

Before we can process and embed our manual content, we need to set up the right environment. This involves selecting the appropriate tools for HTML parsing, token counting, and embedding generation.

For this project, we will use Python 3.13 because of its mature ecosystem and compatibility with modern libraries. We’ll use BeautifulSoup to parse and traverse the HTML structure, and tiktoken, OpenAI's tokeniser, to accurately measure token lengths according to specific LLM models. PostgreSQL with the pgvector extension will allow us to efficiently store and query vector embeddings.

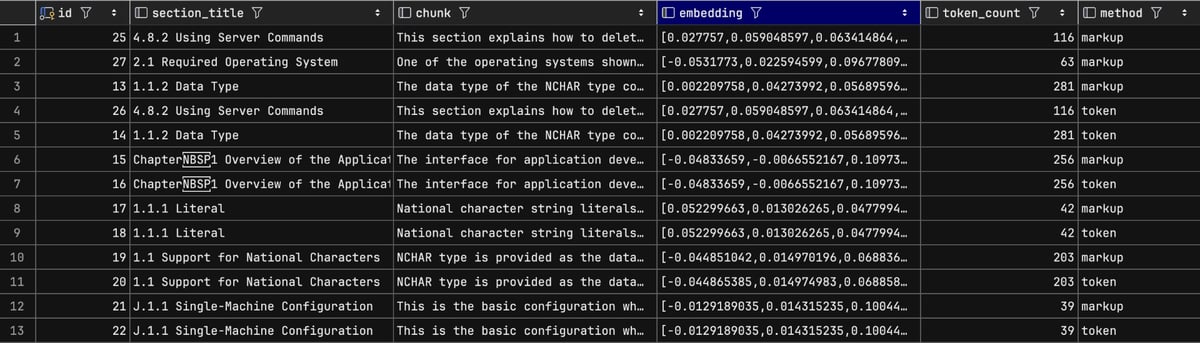

We will initially use the below table definition to store our chunking strategy for a single version of the manual, including both markup-based and token-aware chunking. As we evolve this approach in other articles, we will expand the schema to support multiple versions of the manual and experiment with alternative embedding models.

This will allow us to systematically compare how chunking methods and model choices influence retrieval accuracy and performance across different document versions and embedding strategies.

(

id serial primary key,

section_title text,

chunk text,

embedding vector(1536),

token_count integer,

method integer,

section_anchor text

);

This table definition ensures that each stored chunk retains its section title, text, token count, and the method used to create it, which will be important when comparing retrieval effectiveness.

Chunking by markup

Chunking by markup is the first step because it respects the structure of the document. Manuals are typically organised into a nested hierarchy using tags like <h1>, <h2>, <h3>, and <p>. These sections usually contain logically grouped information that makes sense to present as a whole. For example, a procedure or configuration guide under a specific header should not be arbitrarily split, as doing so could separate instructions from important context.

The following Python function parses the passed in HTML file, grouping content by header tags. This ensures that each chunk corresponds to a self-contained, meaningful unit from the manual.

def process_html_chunking(dir_path):

# Loop through HTML files in the specified directory

for filename in os.listdir(dir_path):

if filename.endswith(".html"):

file_path = os.path.join(dir_path, filename)

# Read the HTML file

with open(file_path, 'r', encoding='utf-8') as file:

html_content = file.read()

# Parse the HTML content

soup = BeautifulSoup(html_content, features:'html.parser')

chunks = []

current_chunk = ""

section_title = ""

for element in soup.find_all(['h1', 'h2', 'h3', 'p']):

if element.name in ['h1', 'h2', 'h3']:

# When we find a heading and have accumulated text, save the current chunk

if current_chunk.strip():

chunks.append({'title': section_title, 'text': current_chunk.strip()})

# Update the section title and reset the current chunk

section_title = element.get_text()

current_chunk = ""

else:

# For paragraph elements, add their text to the current chunk

current_chunk += element.get_text() + " "

# Add the last chunk if there's content

if current_chunk.strip():

chunks.append({'title': section_title, 'text': current_chunk.strip()})

This approach ensures that the document's hierarchy and logical flow are maintained in each chunk. It’s particularly useful when the manual contains multi-step procedures, as breaking these up incorrectly could make the information less useful or harder to follow.

Token-aware chunking

While markup chunking is ideal for preserving logical structure, some sections of technical manuals can still be too large to pass directly into an LLM due to token constraints. To address this, we introduce token-aware chunking as a secondary safeguard.

Token-aware chunking ensures that each text chunk remains within the maximum token limit of the embedding model. The goal here is to further split overly large sections into smaller, manageable pieces without exceeding the model’s capacity. We typically target chunks under 600 tokens to accommodate common model windows and to leave space for prompt context.

Here’s the Python function to count tokens using the `tiktoken` library, which accurately reflects how OpenAI models tokenise text.

def token_subchunk(section_text):

# Encode the text into tokens

enc = encoding_for_model("gpt-4o")

tokens = enc.encode(section_text)

chunks = []

start_idx = 0

# Iterate through the tokens, creating chunks of maximum MAX_TOKENS size

while start_idx < len(tokens):

# Calculate the end index for this chunk (not exceeding the token array length)

end_idx = min(start_idx + MAX_TOKENS, len(tokens))

# Convert the token subset back to text

text_chunk = enc.decode(tokens[start_idx:end_idx])

chunks.append(text_chunk)

# Move to the next chunk

start_idx = end_idx

return chunks

def process_html_chunking(dir_path):

…

# Process each markup chunk for both markup and token-based storage

for chunk in chunks:

# Store the original markup-based chunk with its embedding

store_embedding(chunk['text'], chunk['title'], method:'markup')

# Check if the chunk exceeds the token limit

if token_count(chunk['text']) > MAX_TOKENS:

# If too large, break it down into smaller token-based chunks

subchunks = token_subchunk(chunk['text'])

for subchunk in subchunks:

# Store each subchunk with same section title but marked as 'token' method

store_embedding(subchunk, chunk['title'], method:'token')

else:

# For small chunks, store them as-is with 'token' method for consistency

store_embedding(chunk['text'], chunk['title'], method:'token')

print(f"Processed and stored {len(chunks)} markup chunks and token subchunks.")

This approach allows us to fine-tune the chunk sizes dynamically based on the actual tokenisation patterns, ensuring that no chunk is too large to embed or retrieve effectively.

Embedding and storing the chunks

Once we have the appropriately sized chunks, the next step is to generate embeddings and store them in our PostgreSQL database. We will use OpenAI’s embedding model `text-embedding-3-small` to create vector representations of each chunk. These embeddings enable similarity search, which is the backbone of RAG systems.

Here’s the Python function to embed and store each chunk along with relevant metadata.

def store_embeddin(chunk_text, section_title, method):

# Generate embedding using text-embedding-3-small model

response = openai.embeddings.create(model='text-embedding-3-small', input=chunk_text)

embedding = response.data[0].embedding

# Insert the chunk, its embedding, and metadata into the database

cur.execute(query:'''

INSERT INTO production.manual_chunks (section_title, chunk, embedding, token_count, method)

VALUES (%s, %s, %s, %s, %s)

''', vars:(section_title, chunk_text, embedding, token_count(chunk_text), method))

conn.commit() # Commit the transaction to save changes

We iterate through our chunks, applying token-aware sub-chunking where necessary. Chunks that fit within the token limit are stored as-is, while those that exceed the limit are further divided before storage.

This step ensures that our database contains a combination of semantically rich and token-compliant chunks, ready for efficient similarity search.

We can execute the program with the following, which will embed all of the HTML files in the specified folder.

The SQL definition for the table can be found here: manual_chunks.sql and the full Python code can be found here: EmbedHTMLManual.py (you will need to put your own DB connection string and API key in, in addition to installing the pre-requisites and packages).

The output from our program gives us an indication of how many chunks and subchunks each HTML file produces.

Processed and stored 1 markup chunks and token subchunks.

Processed and stored 1 markup chunks and token subchunks.

Processed and stored 2 markup chunks and token subchunks.

Processed and stored 1 markup chunks and token subchunks.

Processed and stored 1 markup chunks and token subchunks.

Below is a snippet showing both markup and token method embeddings.

Examining retrieval effectiveness

With our database populated, we can now test how well this chunking method supports retrieval. We use a vector similarity search to retrieve the most relevant chunks based on a query embedding.

Because of how the python3u implementation works in PostgreSQL, we can’t use Python virtual environments in the usual way. There are also some challenges with managing Python package paths.

My approach is to install libraries, like the openai Python package, into a separate virtual environment, then manually add the path to that environment inside a PostgreSQL function.

I wrote this function to embed the query string directly within SQL. If you prefer, you can also use the function with the same name that’s provided in the pgai extension, it’s already built for this purpose and can simplify your setup.

RETURNS vector

LANGUAGE plpython3u

AS $$

import sys

# Add the virtual environment path

sys.path.append('/Users/garyevans/venv/lib/python3.13/site-packages')

from openai import OpenAI

client = OpenAI(api_key='**************************************************')

try:

response = client.embeddings.create(

model=model,

input=input_text

)

embedding = response.data[0].embedding

return embedding

except Exception as e:

plpy.warning(f"OpenAI API error: {str(e)}")

return None

$$;

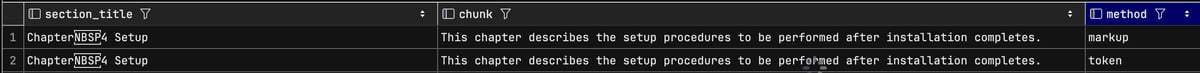

Below is what a typical query might look like using the customer written function:

FROM production.manual_chunks

ORDER BY embedding <=> openai_embed('text-embedding-3-small', 'setup up of software before install')

LIMIT 2;

As you can see, the results of just performing a semantic search on our embeddings was a little underwhelming. For example, when I searched for "setup of software before install", the top result returned was a section titled "This chapter describes the setup procedures to be performed after installation completes." Technically, the model found something linguistically similar, but semantically it completely missed the point, I was asking about pre-installation steps, not what happens after.

This is a good reminder that smaller embedding models like text-embedding-3-small are designed for speed and low cost, but they often lack the depth required for nuanced, domain-specific searches. Manuals use structured language and subtle distinctions in procedural steps that small models struggle to pick up. A slight change in phrasing can completely throw off the retrieval accuracy when using pure vector distance.

Improving embedding accuracy with RAG

So, our next step, which we will cover in the next article is to test more capable models, such as text-embedding-3-large. The large model has significantly better semantic resolution and is more likely to capture the subtle differences that matter in technical content. However, relying solely on vector similarity, even with a better model, has limitations. This is where Retrieval-Augmented Generation (RAG) comes in, which we will discuss in a later article after we have examined some different models to improve our embeddings.

So, our next step, which we will cover in the next article is to test more capable models, such as text-embedding-3-large. The large model has significantly better semantic resolution and is more likely to capture the subtle differences that matter in technical content. However, relying solely on vector similarity, even with a better model, has limitations. This is where Retrieval-Augmented Generation (RAG) comes in, which we will discuss in a later article after we have examined some different models to improve our embeddings.

RAG enhances search by retrieving several top candidate chunks (not just the closest one or two) and feeding them into a large language model, which can then generate an answer using the retrieved context. This approach dramatically improves results because the LLM can resolve ambiguities, combine information across multiple sections, and compensate for imperfect retrieval.

Moving towards a RAG system would allow us to bridge the gap between simple vector search and a fully conversational, context-aware assistant. By combining semantic retrieval with generative reasoning, we can deliver much more accurate and helpful answers, especially in complex environments like technical documentation.

While we did use an LLM to generate our embeddings that we stored locally, this is a completely different role to what the LLM plays when we use it (infer it) as a generative model. When used to generate embeddings, it is just giving us vector that represents a word’s meaning in multi-dimensional space, we don’t get reasoning or answers. When we use it as a generative model, we use it as a decoder to generate token by token answers which utilises its trained knowledge to produce a meaningful response.

Summary

I used Fujitsu Enterprise Postgres manuals for testing, and found that markup-based chunking was the most effective primary strategy. This approach preserved the semantic and logical structure of the document, which helped maintain context and deliver more complete answers. To complement this, I applied token-aware chunking as a secondary layer to ensure that no chunk exceeded the token limits of the language model.

I used Fujitsu Enterprise Postgres manuals for testing, and found that markup-based chunking was the most effective primary strategy. This approach preserved the semantic and logical structure of the document, which helped maintain context and deliver more complete answers. To complement this, I applied token-aware chunking as a secondary layer to ensure that no chunk exceeded the token limits of the language model.

In practice, combining both methods delivers the best of both worlds: logically coherent chunks that remain within the processing limits of modern LLMs. By storing the chunking method as metadata, you can track, evaluate, and fine-tune your retrieval strategy over time without disrupting the overall system.

This combined approach creates a highly maintainable and scalable embedding framework, ideal for building the retrieval layer of a PostgreSQL-powered RAG interface. It’s flexible enough to support multiple manual versions, multi-language content, and more advanced retrieval pipelines as your system evolves.

In the next article, I’ll explore how different LLMs perform when generating embeddings and take the next step towards a fully realised RAG system by using an LLM as a reasoning engine to further improve retrieval quality.

Other blog posts in this series

- Why Enterprise AI starts with the database - Not the data swamp

- Understanding similarity vs distance in PostgreSQL vector search

- What is usually embedded in vector search: Sentences, words, or tokens?

- How to store and query embeddings in PostgreSQL without losing your mind

- From embeddings to answers: How to use vector embeddings in a RAG pipeline with PostgreSQL and an LLM

- Improving RAG in PostgreSQL: From basic retrieval to smarter context

When working with embeddings in PostgreSQL, particularly for use cases like semantic search, recommendation systems, or retrieval-augmented generation (RAG), how you prepare and ingest data matters just as much as how you query it.

When working with embeddings in PostgreSQL, particularly for use cases like semantic search, recommendation systems, or retrieval-augmented generation (RAG), how you prepare and ingest data matters just as much as how you query it.