Learn how to enhance your PostgreSQL searches with Retrieval-Augmented Generation (RAG) for precise, context-aware answers using vector embeddings and Large Language Models (LLM).

We explored how to embed data into PostgreSQL using pgvector, and how to perform similarity searches to return the most relevant rows. This gave us a powerful new way to interact with our data using semantic meaning rather than rigid keyword matches. We could search for “good offshore sailing boats” and get back Tayana 37s and Vancouver 32s, even if the exact phrase “offshore sailing” didn’t appear in the database.

But we also hit a limitation

This is where Retrieval-Augmented Generation (RAG) takes us to the next level.

RAG builds on vector search, but adds reasoning. It allows us to embed the user query as before, use semantic similarity to retrieve relevant documents or records, and then pass that retrieved context into a language model like ChatGPT to generate a coherent, grounded response. This is where things really change. Instead of returning raw snippets or document chunks and leaving the user to interpret them, the model synthesises an answer from the retrieved data bringing reasoning, fluency, and domain knowledge into the equation.

When we say the answer is grounded we mean that it’s anchored in real, dynamic, and verifiable information drawn from our own systems at the point of inference. The response isn’t a hallucination or a best guess—it’s based on actual listings, descriptions, specifications, or inspection reports we’ve embedded and stored. This ability to justify an answer with traceable evidence by citing source snippets, reflecting original phrasing, and linking back to the underlying documents is essential for enterprise use. It builds trust with users, supports compliance in regulated industries, and gives teams confidence that the AI isn’t making things up. Whether you’re advising a customer on a boat purchase or summarizing an audit record for a compliance officer, grounding the output with explainable, inspectable inputs is fast becoming a baseline requirement for production-grade systems.

The RAG difference

Let’s return to the boat broker example to illustrate the difference.

Suppose a buyer asks, “What are the best bluewater cruisers under 40 feet with a reputation for safety?” Using vector search alone, we’d embed the query, find the most similar boat descriptions, and return the top five matches. That might include boats like the Tayana 52, Cape George 36, and Pacific Seacraft 34. But the returned records would still be raw fragments of text from listings. It would be up to the user to interpret them, weigh the pros and cons, and draw conclusions.

With RAG, we can go further. We still perform the same semantic search over the PostgreSQL vector store, but now we take those retrieved descriptions and format them into a structured prompt. We might include three or four matching boat descriptions, then ask the LLM to compare them, highlight the ones under 40 feet, and focus on safety and offshore pedigree.

The language model now has the raw information plus a clear instruction. It can reason over the retrieved content, discard irrelevant boats, and highlight the most relevant features, all in one fluent, natural-language response. This is no longer a search engine returning candidates. It’s a domain-aware assistant giving advice.

This shift from retrieval to retrieval plus generation is powerful. It’s what turns vector search from a backend trick into a front-end capability. The model can infer meaning, handle ambiguity, and provide an explanation. And the grounding step of injecting retrieved content into the model ensures it isn’t hallucinating.

Bridging the gap between data and decisions

The beauty of this setup is that it doesn’t require changing your database schema or moving your data to a third-party vector store. If you’re already storing your embeddings in PostgreSQL using pgvector, you’re ready to implement RAG. All you need is a small service layer that embeds the user query, performs the vector search, assembles the top matches, and calls the language model API with a well structured prompt.

This is how we bridge the gap between data and decisions. PostgreSQL gives us fast, flexible retrieval. The LLM gives us structured, context-aware reasoning. Together, they allow us to build smart applications that don’t just return documents—they return answers.

Let’s put this into practice by creating a simple Python script that connects the following pieces together: embedding a user query, searching PostgreSQL, assembling results, and prompting the LLM. You’ll be able to adapt it for your own use case, whether it’s searching technical manuals or navigating any other domain with complex, fuzzy questions and deeply structured data.

Let's see an actual example

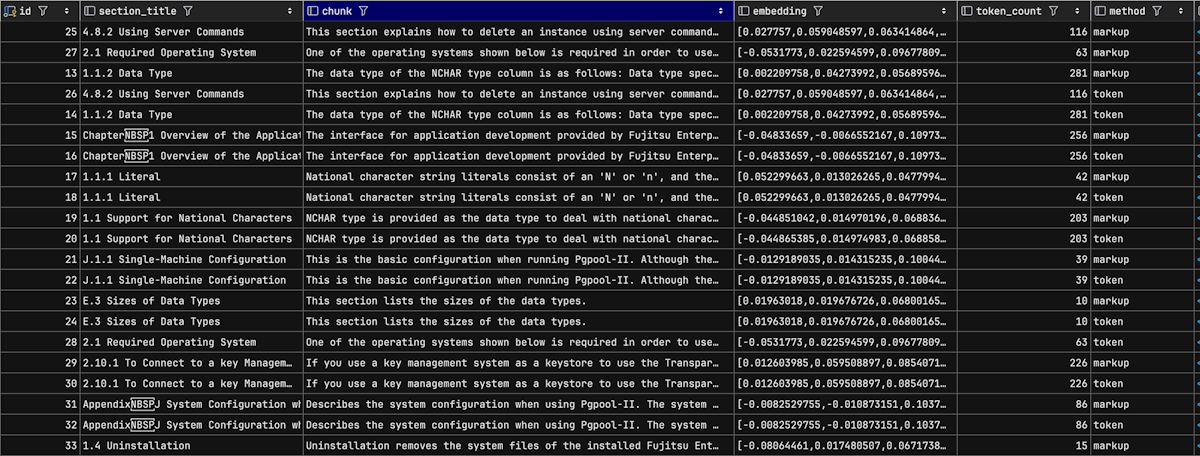

We are going to build on our previously created manual_chunks table, which stores pre-processed excerpts from the Fujitsu Enterprise Postgres manuals. Each row contains a small section of documentation that we’ve already chunked, tokenized, and embedded using OpenAI’s text-embedding-3-small model.

The resulting vectors are stored in the embedding column using PostgreSQL’s pgvector extension. This gives us a searchable semantic index that we can now use to find relevant information based on meaning, not just matching keywords. The manual_chunks table is our knowledge base, and it acts as the foundation for grounding responses in the RAG pipeline we’re about to build.

We start by loading environment variables and configuring access to both OpenAI and our PostgreSQL instance. We use the dotenv library to avoid hardcoding secrets like API keys, and psycopg2 to interact with the database. OpenAI's Python SDK will handle both embedding and generation later in the script.

You can download the Python script in this example here.

import psycopg2

from openai import OpenAI

from dotenv import load_dotenv

# Load OpenAI API key from .env file

load_dotenv()

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

# PostgreSQL connection config

PG_CONN_INFO = {

"host": "localhost",

"port": 5432,

"dbname": "pgdevai",

"user": "garyevans",

"password": "***********"

}

Next, we define a function to embed the user’s question. This is a simple call to the OpenAI Embeddings API using the same model we used to embed the chunk field in our manual_chunks table. The result is a vector, essentially a numerical representation of the query’s meaning, that we can use to compare against the stored embeddings in our table.

def embed_query(text):

response = client.embeddings.create(

input=text,

model="text-embedding-3-small"

)

return response.data[0].embedding

With that query embedding in hand, we connect to the PostgreSQL database and run a vector similarity search using the <-> operator provided by the pgvector extension. This returns the top matching rows based on semantic similarity, meaning the model can find relevant content even if the words don’t exactly match. We also retrieve the section_title, chunk, method, and section_anchor fields to give us enough context to later construct a meaningful prompt.

def retrieve_chunks(query_vector, top_nbr=5):

with psycopg2.connect(**PG_CONN_INFO) as conn:

with conn.cursor() as cur:

cur.execute("""

SELECT section_title, chunk, method, section_anchor

FROM production.manual_chunks

WHERE embedding IS NOT NULL

ORDER BY embedding <-> %s::vector

LIMIT %s;

""", (query_vector, top_nbr))

return cur.fetchall()

Each of these retrieved chunks is a small slice of documentation that’s been pre-chunked, embedded, and stored in the manual_chunks table. Our goal isn’t just to return them, we’ll use them as raw material to generate a response that’s helpful to the user.

With our building blocks in place—query embedding, semantic retrieval, and chunk selection—we tie everything together in the main function. This is the entry point of our script and represents the basic RAG loop in action. The user is prompted for a question, which we embed and use to search the manual_chunks table for the most semantically relevant documentation slices. These chunks will form the context that we pass to the language model to guide its answer. So we’ve transformed an open-ended natural language query into structured context retrieved from our own Fujitsu Enterprise Postgres manuals.

if __name__ == "__main__":

query = input("Enter your question: ").strip()

embedded = embed_query(query)

results = retrieve_chunks(embedded)

answer = generate_response(query, results)

print(answer)

What happens next, however, is where things can really diverge. The way we construct the prompt and instruct the language model to respond has a significant impact on the quality and relevance of the final output. A generic prompt may lead to vague summaries or repetitive content, while a well-structured and targeted prompt can produce clear, grounded, and actionable answers. So, we’ll now look at two possible generate_response functions and explore how prompt design plays a critical role in making RAG pipelines useful.

Here is one example of the generate_reponse function containing a basic neutral-sounding prompt that simply describes the assistant’s role and lists the retrieved chunks, then asks the model to provide a helpful answer.

context = "\n\n".join([

f"{i+1}. {title} (Method: {method})\n{chunk.strip()}"

for i, (title, chunk, method, anchor) in enumerate(chunks)

])

prompt = f"""You are a helpful technical assistant. A user has asked a question about Fujitsu Enterprise Postgres behaviour.

User query: "{user_query}"

Here are the most relevant documentation chunks:

{context}

Using the above information, provide a well-structured and grounded answer.

"""

response = client.chat.completions.create(

model="gpt-4",

messages=[

{"role": "system", "content": "You are a precise and helpful technical assistant."},

{"role": "user", "content": prompt}

],

temperature=0.3

)

return response.choices[0].message.content

While this has worked to an extent, the results are a little underwhelming. The model mostly echoed back the content we had retrieved, lightly rephrasing or summarising it, without offering much in the way of reasoning, filtering, or decision-making. It behaved more like a polite copy-paste engine than a truly useful assistant.

python manualrag1.py

Enter your question: What are the prerequisites for FEP

Embedding query...

Retrieving relevant chunks from PostgreSQL...

Generating answer...

=== Answer ===

Based on the provided documentation, the prerequisites for using the FEP (Front End Processor) seem to be as follows:

1. **Keystore Access**: The FEP instance requires access to a keystore for passing confidential credentials. This can be done using the `pgx_open_keystore` function, at server startup prompt, or using obfuscated files.

2. **Plug-in Trustworthiness**: The plug-ins that are used with the FEP run with the same ownership as the user running the FEP server. Therefore, it is the database administrator's responsibility to ensure that the plug-in is trustworthy.

3. **Plug-in Efficiency**: The plug-ins must be capable of completing operations in a timely manner and returning a response. This is important as multiple plug-ins can be invoked at the same time.

4. **Sensitive Information Handling**: The plug-ins receive confidential credentials as environment variables. However, the system advises against using the feature of passing arbitrary values as arguments when calling the plug-in for passing sensitive information.

5. **Authentication and Authorization**: The authentication and authorization of access to the key management system depends on the implementation of the plug-in. This suggests that the plug-in needs to have appropriate mechanisms in place for authentication and authorization.

Please note that the specifics of these prerequisites might vary based on the exact context and usage of the FEP in your system.

In the next example, we address this by redesigning the prompt to be far more directive. We tell the model explicitly to identify which documentation sections answer the question, to extract only relevant details, to avoid repeating full paragraphs, and to synthesize a grounded, structured answer. We also reminded it not to guess if the information was unclear.

context = "\n\n".join([

f"[{i+1}] Section: {title}\nMethod: {method}\n{chunk.strip()}"

for i, (title, chunk, method, anchor) in enumerate(chunks)

])

prompt = f"""

You are a technical assistant answering a user question using internal technical manuals.

User's Question:

"{user_query}"

Below are retrieved documentation chunks from a semantic search:

{context}

INSTRUCTIONS:

- Read the retrieved content.

- Determine which sections answer the question directly.

- Extract only the information relevant to the question.

- Synthesize a grounded and helpful answer.

- Do NOT repeat full paragraphs.

- Use bullet points or summarize as needed.

- If the content is incomplete or unclear, say so.

Answer:

"""

response = openai.ChatCompletion.create(

model="gpt-4",

messages=[

{"role": "system", "content": "You are a precise and helpful technical assistant who explains only what is supported by the provided documentation."},

{"role": "user", "content": prompt}

],

temperature=0.3

)

return response["choices"][0]["message"]["content"]

The result is noticeably better. The model not only focused on the relevant content—it structured its response clearly, avoided fluff, and presented the answer in a useful format.

The prerequisites for installing Fujitsu Enterprise Postgres, as per the provided documentation, are:

- Preparation as described in Section 4.2, "Preparations for Setup". The exact details of these preparations are not specified in the provided excerpts.

- A hardware environment that includes at least 512 MB of memory, as stated in Section 2.5, "Hardware Environment".

Please refer to the full documentation for a comprehensive list of prerequisites and detailed instructions.

Process finished with exit code 0

Before I go...

Other blog posts in this series

- Why Enterprise AI starts with the database - Not the data swamp

- Understanding similarity vs distance in PostgreSQL vector search

- What is usually embedded in vector search: Sentences, words, or tokens?

- How to store and query embeddings in PostgreSQL without losing your mind

- Embedding content in PostgreSQL using Python: Combining markup chunking and token-aware chunking

- Improving RAG in PostgreSQL: From basic retrieval to smarter context