PostgreSQL 14 provides the LZ4 compression option for columns. It results in faster compression compared to the existing PGLZ in TOAST. In this blog post I will describe how to use this option, and compare its performance with other methods.

PostgreSQL 14 provides the LZ4 compression option for columns. It results in faster compression compared to the existing PGLZ in TOAST. In this blog post I will describe how to use this option, and compare its performance with other methods.

Background

In PostgreSQL, a page is the basic unit to store data, and the size of each page is 8 kB by default. Basically, data in one row is not allowed to be stored across pages. However, some data types have variable length, and they may exceed the size of one page. To overcome this limitation, large field values are compressed and/or broken up into multiple physical rows. This technique is known as TOAST (The Oversized-Attribute Storage Technique)![]() .

.

![]() By default, TOAST is triggered only if there are variable-length columns in a table, and the size of the row data exceeds TOAST_TUPLE_THRESHOLD (normally 2 kB). First, data will be compressed. Then, if data is still too large, it will be stored out-of-line. Be aware that if the storage strategy for columns is specified as EXTERNAL/PLAIN, compression will be disabled.

By default, TOAST is triggered only if there are variable-length columns in a table, and the size of the row data exceeds TOAST_TUPLE_THRESHOLD (normally 2 kB). First, data will be compressed. Then, if data is still too large, it will be stored out-of-line. Be aware that if the storage strategy for columns is specified as EXTERNAL/PLAIN, compression will be disabled.

Before PostgreSQL 14, TOAST supported only one compression algorithm - PGLZ, a PostgreSQL built-in algorithm. But other compression algorithms may be faster or have higher compression ratios than PGLZ.

But as of PostgreSQL 14 we have another option: LZ4 compression![]() - a lossless compression algorithm known for its speed. So, we can expect it to help improving the speed of compression and decompression in TOAST.

- a lossless compression algorithm known for its speed. So, we can expect it to help improving the speed of compression and decompression in TOAST.

How to use LZ4?

In order to use the newly added LZ4 compression feature in PostgreSQL14, you will need to install the libraries related to LZ4 into the OS, and specify the --with-lz4 option when compiling and packaging PostgreSQL.

Setting LZ4 as the default compression algorithm

You can specify the compression algorithm for TOAST in the PostgreSQL instance by configuring the GUC parameter default_toast_compression. You can either modify postgresql.conf or use the SET command to change it for the current client connection (session) only.

SET

Setting the column compression algorithm on CREATE TABLE

postgres(# col1 text COMPRESSION pglz,

postgres(# col2 text COMPRESSION lz4,

postgres(# col3 text);

CREATE TABLE

postgres=# \d+ tbl

Table "public.tbl"

Column | Type | … | Storage | Compression | …

-------+---------+---+----------+-------------+ …

id | integer | | plain | |

col1 | text | | extended | pglz |

col2 | text | | extended | lz4 |

col3 | text | | extended | |

Access method: heap

We used \d+ (a describe command) to show the compression algorithm of all columns. If the column does not support compression or no compression algorithm is specified, a blank is displayed in the Compression column.

In the example above, we see that column id does not support compression, col1 uses PGLZ, col2 uses LZ4, and col3 has no compression algorithm specified and so it will use the default compression algorithm.

Changing the column compression algorithm on ALTER TABLE

The compression algorithm of an existing column can be changed using ALTER TABLE, but note that the new compression algorithm will be applied only to data inserted after executing ALTER TABLE command.

INSERT 0 1

postgres=# ALTER TABLE tbl ALTER COLUMN col1 SET COMPRESSION lz4;

ALTER TABLE

postgres=# INSERT INTO tbl VALUES (2, repeat('abc',1000), repeat('abc',1000),repeat('abc',1000));

INSERT 0 1

postgres=# SELECT id,

postgres-# pg_column_compression(id) AS compression_colid,

postgres-# pg_column_compression(col1) AS compression_col1,

postgres-# pg_column_compression(col2) AS compression_col2,

postgres-# pg_column_compression(col3) AS compression_col3

postgres-# FROM tbl;

id | compression_colid | compression_col1 | compression_col2 | compression_col3

---+-------------------+------------------+------------------+------------------

1 | | pglz | lz4 | lz4

2 | | lz4 | lz4 | lz4

(2 rows)

Above we see that in the row that was inserted before we changed the compression algorithm, col1 is still compressed using PGLZ, even after we changed the compression from PGLZ to LZ4.

Notes

- If you insert data from other tables, for example, using CREATE TABLE ... AS ..., or INSERT INTO ... SELECT ..., the compression method of the inserted data will remain the same as the original data.

- While supporting LZ4, pg_dump and pg_dumpall also add the option --no-toast-compression, which will not dump TOAST compression option when used.

Performance comparison

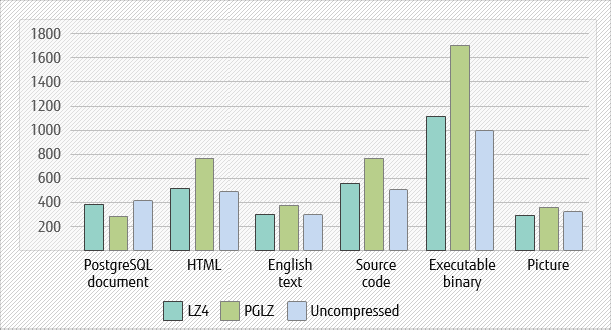

Here I did some tests to compare LZ4 with PGLZ in terms of both compression ratio and compression speed. As a reference, I added the test results for uncompressed data (specifying storage strategy as EXTERNAL). For uncompressed data, there is no time consumed for compression and decompression, but accordingly, time spent on reading and writing data increases.

Preparation

The following data was used in the tests:

- PostgreSQL documents (one HTML file per row)

- Data provided by Silesia Corpus

, including:

, including:

- HTML

- Text

- Source code

- Executable binary

- Picture

The processor spec of the test server is Intel® Xeon® Silver 4210 CPU @2.20GHz with 10 cores/20 threads/2 sockets.

I used pgbench ![]() to measure SQL execution times, and the system function pg_table_size

to measure SQL execution times, and the system function pg_table_size![]() to check table sizes (note: the command VACUMM FULL was executed before each test to clean the data storage) .

to check table sizes (note: the command VACUMM FULL was executed before each test to clean the data storage) .

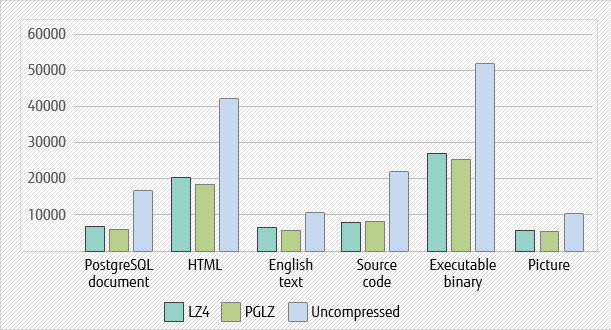

Compression ratio

The compression ratios of both PGLZ and LZ4 are related to duplicate data - the more duplicate items there are, the higher the compression ratio is.

However, compression will not be performed in cases where PostgreSQL evaluates that the resulting ratio would not be good, even if data size reaches the threshold. This is so because compression would not save disk space effectively, and would instead result in additional time and resources to decompress.

According to the current source code of PostgreSQL14, PGLZ requires a compression ratio of at least 25%, while LZ4 requires only that the compressed data to be no larger than uncompressed data.

I compared table sizes using compression algorithms LZ4 and PGLZ, against an uncompressed one. We can see that in most cases, the compression ratio of PGLZ is slightly better - on average, the compression ratio of PGLZ is 2.23, and the compression ratio of LZ4 is 2.07. That means that compared to LZ4, PGLZ can save about 7% of disk space.

Compression/decompression speed

TOAST data is compressed/decompressed while being inserted/queried. So, I executed some SQL statements to see the impact of different compression algorithms on the compression/decompression speed.

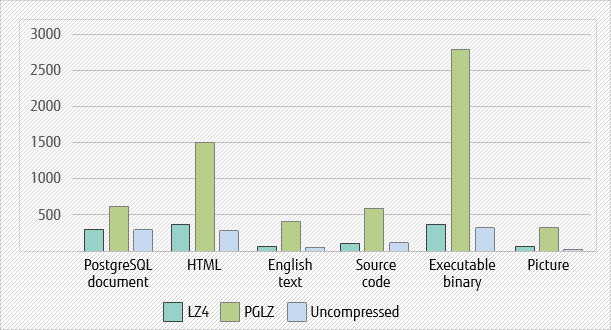

INSERT statements

First, I compared the performance of INSERT statements in columns compressed using LZ4, using PGLZ, and using no compression.

I saw that LZ4 takes slightly more time to insert data than uncompressed one, while the time spent on data insertion increases significantly when using PGLZ. On average, LZ4 compression only takes about 20% of the time spent by PGLZ. This is a very significant improvement.

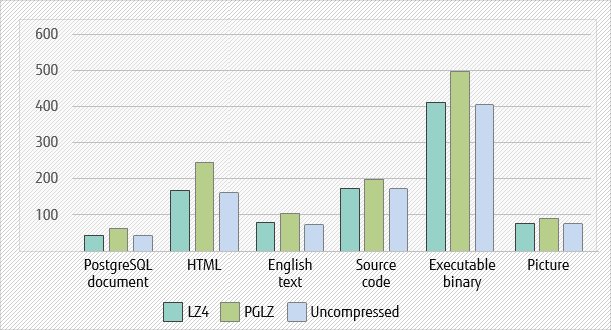

SELECT statements

Next, I compared the performance of SELECT statements using the same column compression settings.

The result was that while querying the data, LZ4 reduces the time by about 20% when compared to PGLZ, and there is no obvious increase compared to uncompressed one. It seems that time spent on decompression has been reduced to a very low level.

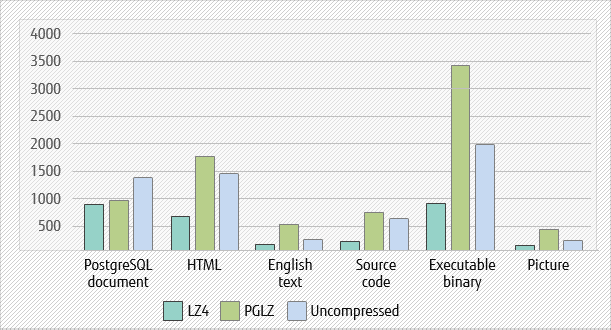

INSERT statements with 16 clients

Another common scenario that I tested was accessing the database from multiple clients - 16 in this case.

What I found out, as can be seen below, is that compression performance of single large files (HTML, English text, source code, executable binary, pictures) using LZ4 was 60% to 70% faster compared to PGLZ, and that there was also a small improvement while inserting multiple small files (PostgreSQL document).

There was also a significant improvement compared to uncompressed one, which I guess is because using compression reduces the amount of data written to disk.

SELECT statements with 16 clients

I then tested the performance of SELECT statements in the multiple-client query scenario.

Again, LZ4 performed better than PGLZ in most cases.

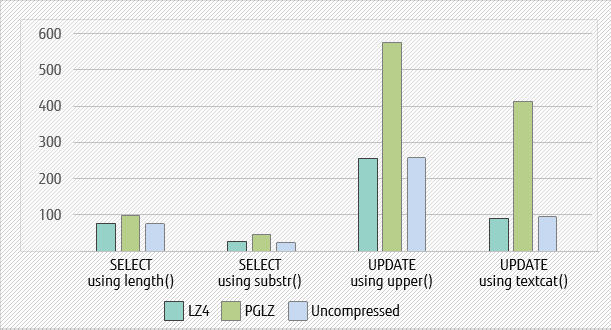

String functions

Finally, I compared the speed of text processing by executing SELECT and UPDATE statements using string functions.

In this scenario, LZ4 outperformed PGLZ. And the time spent on each function with LZ4-compressed data was almost the same as uncompressed data, which means that LZ4 compression barely affects string operation speed.

Conclusion

Compared with PGLZ, LZ4 is more efficient in compressing and decompressing TOAST data, and it presents excellent performance - query speed is close to that of uncompressed data, and the speed of data insertion is 80% faster compared to PGLZ. Of course, compression ratio is sacrificed in some scenarios, but if you want to improve the execution speed, I strongly recommend LZ4 over PGLZ.

Note that you should consider whether the data in tables is suitable for compression. If the compression ratio is not good, it will still try to compress the data and then give it up. This will cause an extra waste of memory resource and greatly affect the speed of inserting data.

For the future

The performance of compression and decompression is improved greatly with LZ4 compression support for TOAST.

In addition to LZ4, there are also some other excellent compression algorithms, such as Zstandard. With the support for Zstandard, users can get an even better compression ratio compared with PGLZ. Another one is LZ4 HC, which has an average compression speed of 98.5% of LZ4's decompression speed, but substantially improves the compression ratio. I hope there will be more compression algorithm choices in future PostgreSQL versions, so users can freely choose according to their needs.

Besides TOAST, there is also a need to use compression in some other scenarios. As far as I know, LZ4 compression for WAL has already been supported in the current development version![]() , which is very exciting.

, which is very exciting.