In this session, I walked through the evolution and mechanics of logical replication in PostgreSQL. I started with a quick overview of replication concepts, then dove into logical replication slots and failover and switchover strategies. Finally, I explored how PostgreSQL 17 introduces native support for failover slots: configuration tips, synchronization methods (manual and automatic), and the steps needed to make your setup failover-ready.

Let's get to the details: Ensuring High Availability of Logical Replication in PostgreSQL 17 with failover logical slots

Below, you'll find the slides from my presentation at PGConf India 2025.


Failover Logical Slots - Ensuring High Availability of Logical Replication in PostgreSQL 17

Nisha Moond

Application Developer

Fujitsu

Agenda

- Logical Replication overview

- Logical Replication slots

- Failover & Switchover basics

- Switchover in PostgreSQL 16

- Synchronization of Logical Replication slots

- Configuring failover slots in PostgreSQL 17

- Synchronization methods: manual & automatic

- Steps to ensure failover readiness

- Switchover in PostgreSQL 17

- Summary

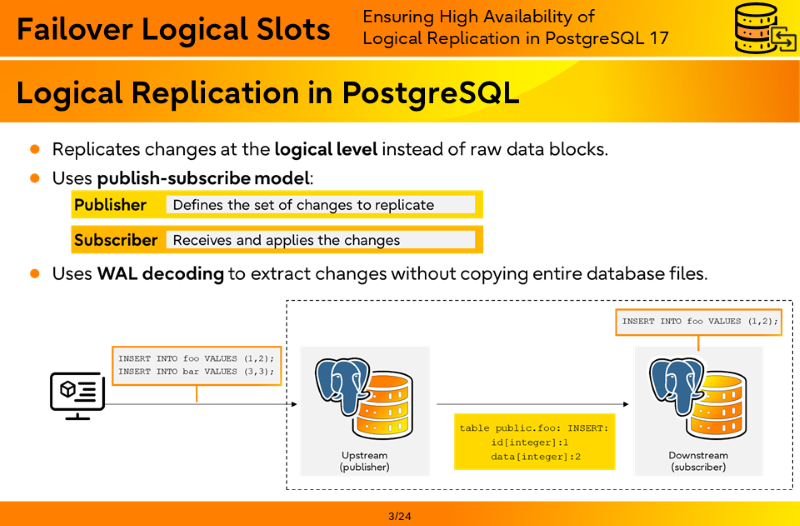

Logical Replication in PostgreSQL

- Replicates changes at the logical level instead of as raw data blocks.

- Uses a publish-subscribe model:

  - Publisher: defines the set of changes to replicate

  - Subscriber: receives and applies the changes

- Uses WAL decoding (logical decoding) to extract changes without copying entire database files.

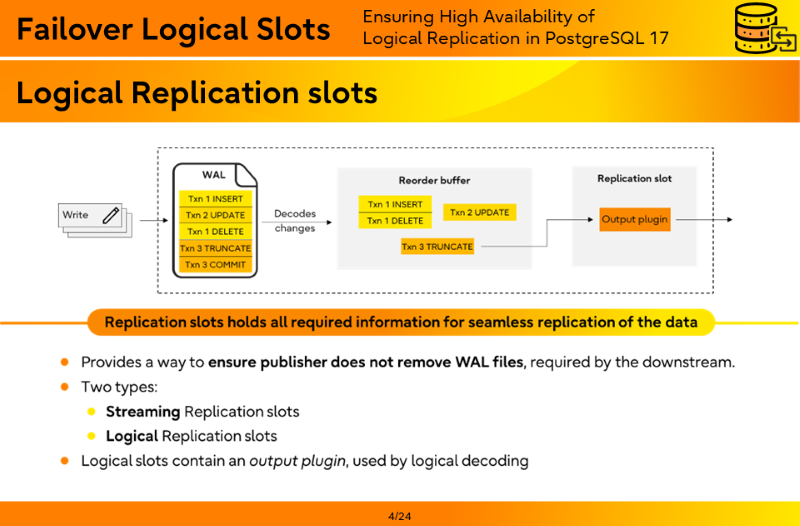

Logical Replication slots

A replication slot holds all the information needed for seamless replication of data.

- Ensures the publisher does not remove WAL files that the downstream still needs.

- Two types:

  - Physical (streaming) replication slots

  - Logical replication slots

- A logical slot is tied to an output plugin, which logical decoding uses to format the decoded changes.

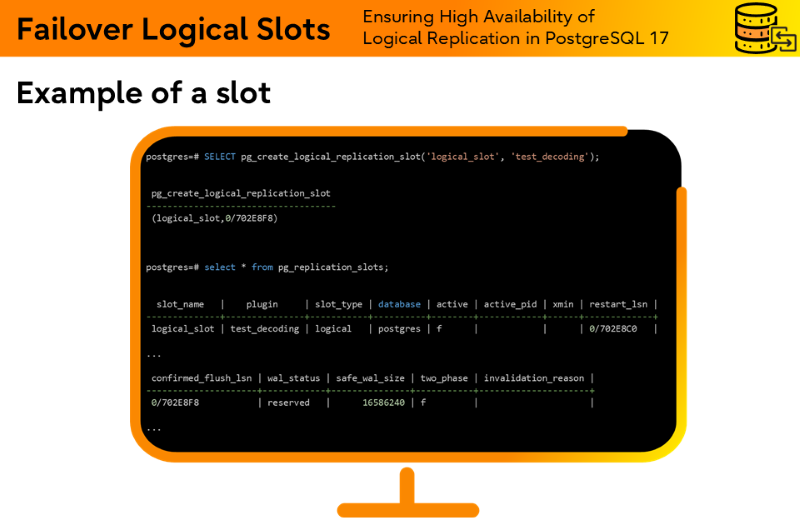

Example of a slot

```
postgres=# SELECT pg_create_logical_replication_slot('logical_slot', 'test_decoding');
 pg_create_logical_replication_slot
------------------------------------
 (logical_slot,0/702E8F8)

postgres=# SELECT * FROM pg_replication_slots;
  slot_name   |    plugin     | slot_type | database | active | active_pid | xmin | restart_lsn |
--------------+---------------+-----------+----------+--------+------------+------+-------------+
 logical_slot | test_decoding | logical   | postgres | f      |            |      | 0/702E8C0   |
...
 confirmed_flush_lsn | wal_status | safe_wal_size | two_phase | invalidation_reason |
---------------------+------------+---------------+-----------+---------------------+
 0/702E8F8           | reserved   |      16586240 | f         |                     |
...
```
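The LSN columns in this output are pg_lsn values. Each one is written as two hexadecimal halves separated by a slash. The short Python sketch below is not part of the original talk, and the helper names parse_lsn and format_lsn are hypothetical. It converts these values to integers so that the two positions of this slot can be compared, which is handy when scripting checks like the ones later in this post.

```python
def parse_lsn(text: str) -> int:
    """Convert a textual pg_lsn such as '0/702E8F8' into a 64-bit integer.

    The part before the slash holds the high 32 bits and the part after
    it the low 32 bits, both written in hexadecimal.
    """
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def format_lsn(value: int) -> str:
    """Inverse of parse_lsn: render an integer in the X/Y hex form."""
    return f"{value >> 32:X}/{value & 0xFFFFFFFF:X}"


# Positions copied from the slot example above.
restart_lsn = parse_lsn("0/702E8C0")
confirmed_flush_lsn = parse_lsn("0/702E8F8")

# In this example the confirmed position is ahead of restart_lsn, the oldest
# WAL position the slot may still need and therefore keeps.
print(confirmed_flush_lsn - restart_lsn)  # → 56 (bytes of WAL)
print(format_lsn(confirmed_flush_lsn))    # → 0/702E8F8
```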

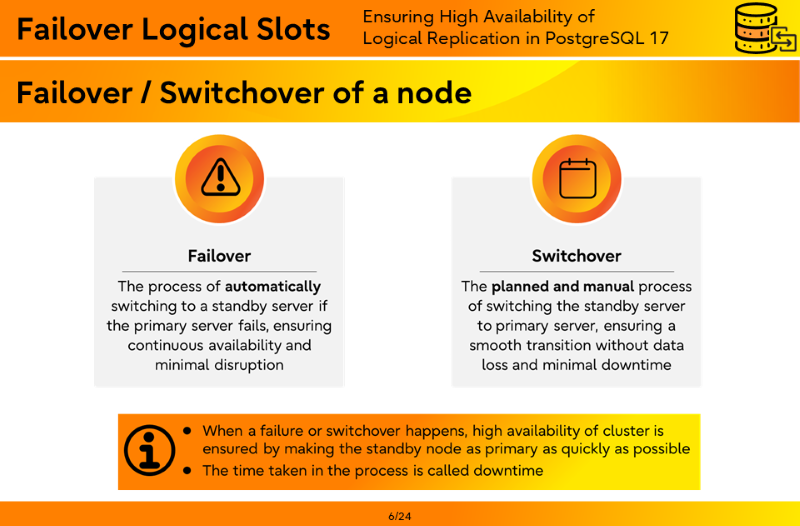

Failover / Switchover of a node

- Failover: the process of automatically switching to a standby server when the primary server fails, ensuring continuous availability with minimal disruption

- Switchover: the planned, manual process of promoting the standby server to primary, ensuring a smooth transition without data loss and with minimal downtime

- When a failure or switchover happens, the cluster stays highly available by promoting the standby node to primary as quickly as possible

- The time during which the service is unavailable in this process is called downtime.

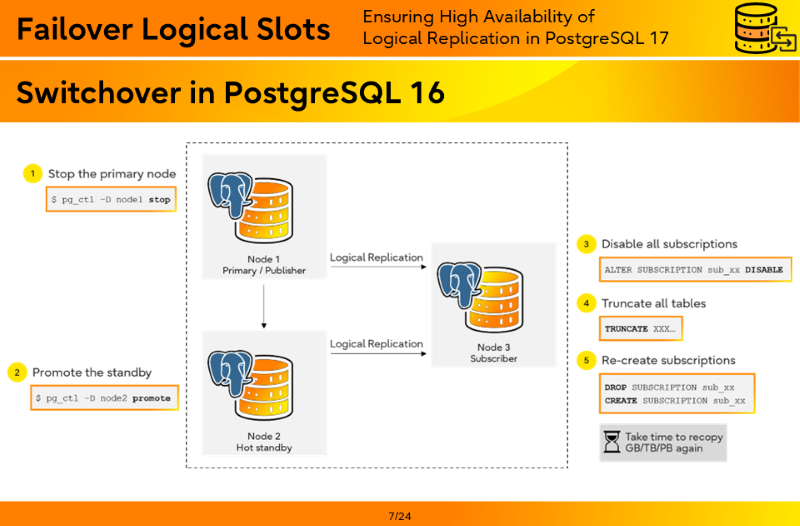

Switchover in PostgreSQL 16

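- Stop the primary node

```shell
$ pg_ctl -D node1 stop
```

- Promote the standby

```shell
$ pg_ctl -D node2 promote
```

- Disable all subscriptions

```sql
ALTER SUBSCRIPTION sub_xx DISABLE;
```

- Truncate all tables

```sql
TRUNCATE XXX…
```

- Re-create subscriptions

```sql
-- The old primary is down, so detach the subscription from its lost slot first;
-- otherwise DROP SUBSCRIPTION tries to connect and drop the remote slot.
ALTER SUBSCRIPTION sub_xx SET (slot_name = NONE);
DROP SUBSCRIPTION sub_xx;
CREATE SUBSCRIPTION sub_xx ...
```

Re-creating the subscriptions copies all table data again, which takes a long time for GB/TB/PB-scale datasets.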

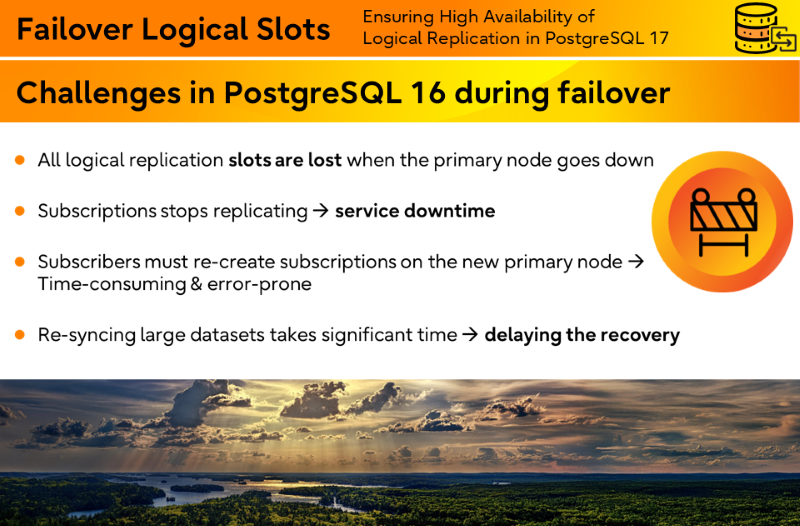

Challenges in PostgreSQL 16 during failover

- Logical replication slots exist only on the primary, so they are all lost when the primary node goes down

- Subscriptions stop replicating → service downtime

- Subscribers must re-create subscriptions on the new primary node → time-consuming and error-prone

- Re-syncing large datasets takes significant time → delayed recovery

Synchronization of logical slots to standby

- Keep the logical replication slots on the standby server in sync with the primary

- Eliminates the need to re-create subscriptions and re-sync data

- Minimizes the time taken by failover and switchover

- Ensures the new primary (the promoted standby) is not behind the subscribers

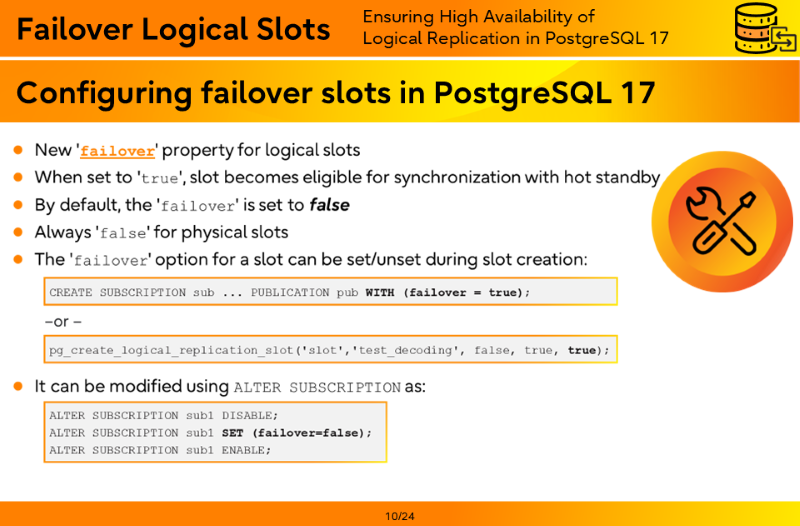

Configuring failover slots in PostgreSQL 17

- New failover property for logical slots

  - When set to true, the slot becomes eligible for synchronization to the hot standby

  - Defaults to false

  - Always false for physical slots

- The failover option can be set when the slot is created:

```sql
CREATE SUBSCRIPTION sub ... PUBLICATION pub WITH (failover = true);
```

or

```sql
-- arguments: slot_name, plugin, temporary, twophase, failover
SELECT pg_create_logical_replication_slot('slot', 'test_decoding', false, true, true);
```

- For a subscription, it can be changed later with ALTER SUBSCRIPTION. The subscription must be disabled first:

```sql
ALTER SUBSCRIPTION sub1 DISABLE;
ALTER SUBSCRIPTION sub1 SET (failover = false);
ALTER SUBSCRIPTION sub1 ENABLE;
```

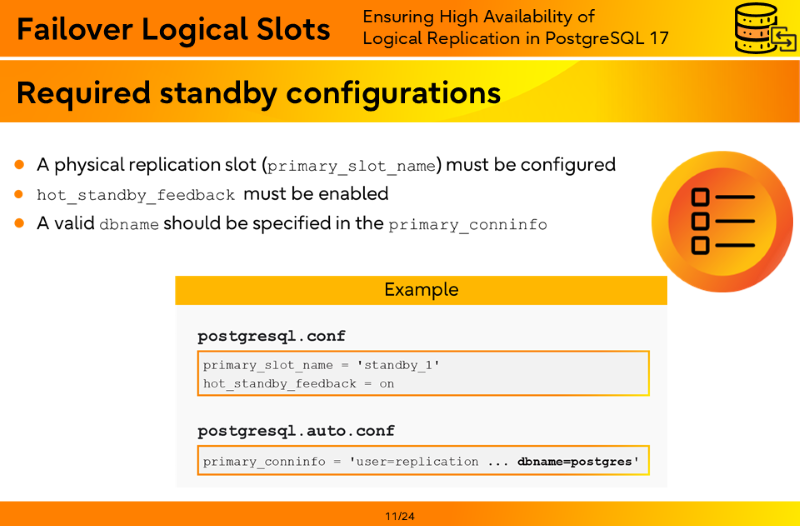

Required standby configurations

- A physical replication slot (primary_slot_name) must be configured

- hot_standby_feedback must be enabled

- A valid dbname must be specified in primary_conninfo

Example

postgresql.conf

```
primary_slot_name = 'standby_1'
hot_standby_feedback = on
```

postgresql.auto.conf

```
primary_conninfo = 'user=replication ... dbname=postgres'
```
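These three prerequisites can be checked mechanically. The Python sketch below is a hypothetical helper, not a PostgreSQL tool. It takes the standby's settings as a plain dictionary (however you choose to collect them) and reports which requirement is missing. Its conninfo parsing is deliberately simplified and assumes unquoted key=value pairs.

```python
def parse_conninfo(conninfo: str) -> dict[str, str]:
    """Split a libpq key=value connection string into a dict.

    Simplification (assumption): values contain no spaces or quotes, and
    tokens without '=' (such as the '...' placeholder) are ignored.
    """
    pairs: dict[str, str] = {}
    for token in conninfo.split():
        key, sep, value = token.partition("=")
        if sep:
            pairs[key] = value
    return pairs


def missing_slot_sync_prereqs(settings: dict[str, str]) -> list[str]:
    """Return the standby requirements for slot synchronization that are not met."""
    problems = []
    if not settings.get("primary_slot_name"):
        problems.append("primary_slot_name is not set")
    # PostgreSQL accepts several spellings of boolean true; cover the common ones.
    if settings.get("hot_standby_feedback", "off").lower() not in ("on", "true", "yes", "1"):
        problems.append("hot_standby_feedback is not enabled")
    if not parse_conninfo(settings.get("primary_conninfo", "")).get("dbname"):
        problems.append("primary_conninfo does not specify dbname")
    return problems


standby = {
    "primary_slot_name": "standby_1",
    "hot_standby_feedback": "on",
    "primary_conninfo": "user=replication ... dbname=postgres",
}
print(missing_slot_sync_prereqs(standby))  # → []
print(missing_slot_sync_prereqs({}))       # all three requirements reported
```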

Methods for slot synchronization

Manual synchronization

- Call the SQL function pg_sync_replication_slots() on the hot standby

- The function connects to the primary node

- Fetches the eligible remote slots from the primary

- Drops obsolete synced slots on the standby

- Creates new synced slots or updates the existing ones

- Requires manual intervention: slots are synced only when the function is called

- Not allowed when automatic synchronization is enabled

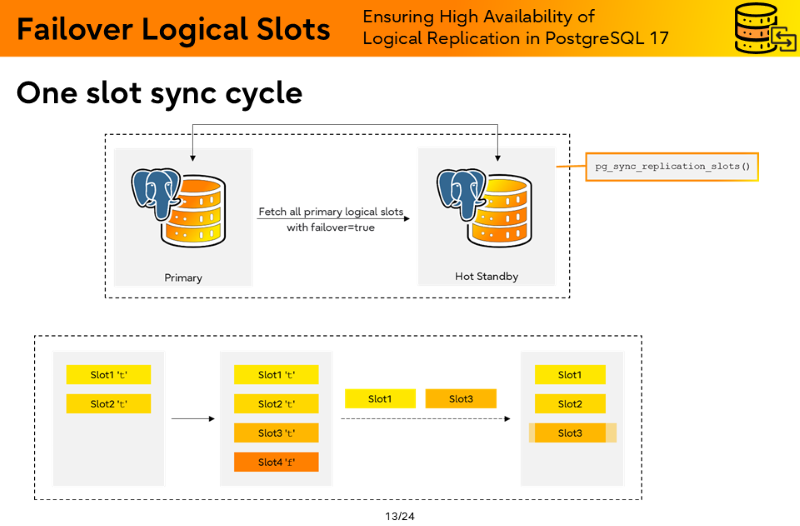

One slot sync cycle

Fetch all primary logical slots with failover=true

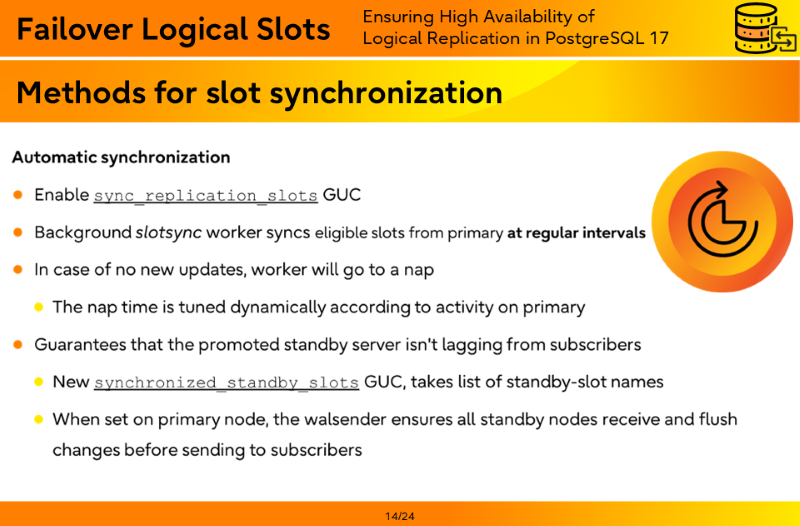
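The cycle described above can be modelled in a few lines: fetch the failover-enabled slots from the primary, drop obsolete synced slots, then create or update the rest. This is a toy Python model for illustration only, using a hypothetical Slot type. The real logic lives inside PostgreSQL and handles many more details, such as LSN and xmin propagation and slots that are already invalidated on the primary. The rule that a slot invalidated only on the standby is dropped and re-created comes from the properties of synced slots described later in this post.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    failover: bool = True      # only meaningful for slots on the primary
    invalidated: bool = False


def plan_sync_cycle(primary: list[Slot], standby_synced: list[Slot]) -> dict[str, list[str]]:
    """Toy model of one sync cycle: which synced slots to drop, create, or update."""
    # Step 1: only failover-enabled slots on the primary are eligible.
    remote = {s.name: s for s in primary if s.failover}
    local = {s.name: s for s in standby_synced}

    # Step 2: a synced slot is obsolete if its source slot is gone (or no
    # longer failover-enabled), or if it was invalidated on the standby
    # while the primary's slot is still valid.
    drop = [
        name for name, slot in local.items()
        if name not in remote or (slot.invalidated and not remote[name].invalidated)
    ]

    # Step 3: create slots missing on the standby (including ones just
    # dropped for re-creation) and update the rest.
    create = [name for name in remote if name not in local or name in drop]
    update = [name for name in remote if name in local and name not in drop]
    return {"drop": sorted(drop), "create": sorted(create), "update": sorted(update)}


# Hypothetical slots: "scratch" is not failover-enabled, "old_sub" no longer exists on the primary.
primary = [Slot("sub1"), Slot("sub2"), Slot("scratch", failover=False)]
standby = [Slot("sub1"), Slot("sub2", invalidated=True), Slot("old_sub")]
print(plan_sync_cycle(primary, standby))
# → {'drop': ['old_sub', 'sub2'], 'create': ['sub2'], 'update': ['sub1']}
```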

Methods for slot synchronization

Automatic synchronization

- Enable the sync_replication_slots GUC on the standby

- A background slotsync worker syncs eligible slots from the primary at regular intervals

- When there are no new updates, the worker naps

- The nap time is tuned dynamically according to activity on the primary

- Guarantees that the promoted standby server is not behind the subscribers:

  - The new synchronized_standby_slots GUC takes a list of physical standby slot names

  - When it is set on the primary node, the walsender of a failover-enabled logical slot sends changes to subscribers only after the listed standbys have received and flushed them
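The adaptive nap can be pictured as a simple backoff. After a cycle that updated some slot, the worker naps briefly; while the primary is idle, the nap grows towards a ceiling. The Python sketch below assumes a doubling backoff between 200 ms and 30 s. The constants MIN_NAP_MS and MAX_NAP_MS and the doubling rule are illustrative assumptions, not documented settings.

```python
MIN_NAP_MS = 200      # assumed lower bound for the nap
MAX_NAP_MS = 30_000   # assumed upper bound (30 s)


def next_nap_ms(current_ms: int, some_slot_updated: bool) -> int:
    """Reset to the minimum after activity; otherwise double, up to the cap."""
    if some_slot_updated:
        return MIN_NAP_MS
    return min(current_ms * 2, MAX_NAP_MS)


def nap_schedule(activity: list[bool], start_ms: int = MIN_NAP_MS) -> list[int]:
    """Nap length chosen after each sync cycle, given whether it updated a slot."""
    naps = []
    nap = start_ms
    for updated in activity:
        nap = next_nap_ms(nap, updated)
        naps.append(nap)
    return naps


# Three idle cycles, one busy cycle, then idle again.
print(nap_schedule([False, False, False, True, False]))
# → [400, 800, 1600, 200, 400]
```

The backoff keeps an idle standby from polling the primary constantly, while a single update brings the worker back to short naps.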

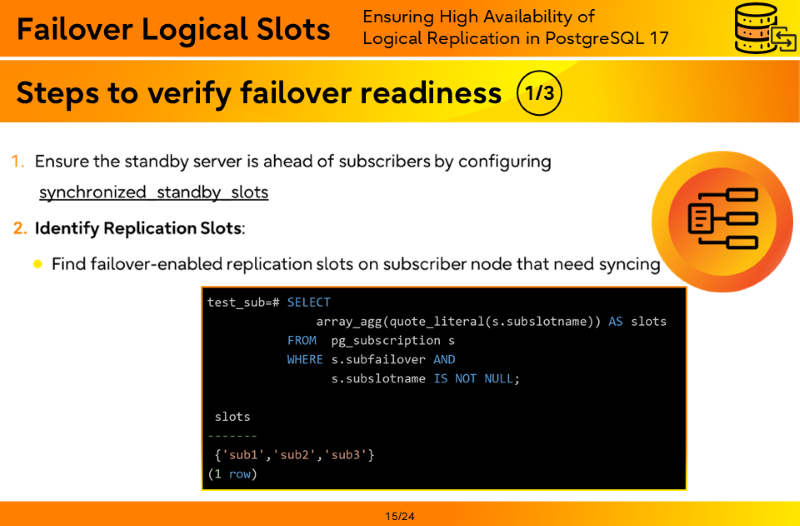
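The effect of synchronized_standby_slots can be modelled as a cap on how far a failover-enabled logical walsender may go. It may send no further than the WAL position that every listed standby slot has flushed. The Python sketch below is a simplified model, not the walsender's code. The function name, the standby_2 slot and the LSN values are hypothetical. A listed slot that does not exist is treated as having flushed nothing.

```python
def parse_lsn(text: str) -> int:
    """Convert a textual pg_lsn such as '0/3000000' into an integer."""
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def max_sendable_lsn(
    wal_flush_lsn: str,
    standby_flushed: dict[str, str],
    synchronized_standby_slots: list[str],
) -> int:
    """Upper bound on the WAL a failover-enabled logical walsender may send.

    Simplified model: the walsender may not get ahead of any standby slot
    listed in synchronized_standby_slots. A listed slot that is missing is
    treated as having flushed nothing, so the walsender has to wait.
    """
    limit = parse_lsn(wal_flush_lsn)
    for slot_name in synchronized_standby_slots:
        flushed = standby_flushed.get(slot_name)
        limit = min(limit, parse_lsn(flushed) if flushed is not None else 0)
    return limit


flushed = {"standby_1": "0/2800000", "standby_2": "0/3000000"}
print(hex(max_sendable_lsn("0/3000000", flushed, ["standby_1", "standby_2"])))  # → 0x2800000
print(hex(max_sendable_lsn("0/3000000", flushed, [])))                           # → 0x3000000
```

With an empty list, the cap is just the primary's own flush position. This is why, when synchronized_standby_slots is left unset, subscribers can receive changes that the standby has not flushed yet.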

Steps to verify failover readiness

- Ensure the standby server stays ahead of the subscribers by configuring synchronized_standby_slots

- Identify replication slots:

  - On the subscriber node, find the failover-enabled replication slots that need to be synced

```
test_sub=# SELECT array_agg(quote_literal(s.subslotname)) AS slots
           FROM pg_subscription s
           WHERE s.subfailover AND s.subslotname IS NOT NULL;
         slots
------------------------
 {'sub1','sub2','sub3'}
(1 row)
```
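For scripting, the same filter can be applied to rows fetched from pg_subscription. The Python sketch below mirrors the query's WHERE clause. Its quote_literal is a simplified stand-in for the PostgreSQL function of the same name that only doubles single quotes, and the sub4/sub5 rows are hypothetical.

```python
def quote_literal(value: str) -> str:
    """Simplified stand-in for PostgreSQL's quote_literal(): doubles single quotes.

    Unlike the real function, it does not handle backslashes.
    """
    return "'" + value.replace("'", "''") + "'"


def failover_slot_literals(subscriptions: list[dict]) -> list[str]:
    """Quoted slot names of failover-enabled subscriptions that own a slot."""
    return [
        quote_literal(sub["subslotname"])
        for sub in subscriptions
        if sub["subfailover"] and sub["subslotname"] is not None
    ]


rows = [
    {"subname": "sub1", "subslotname": "sub1", "subfailover": True},
    {"subname": "sub2", "subslotname": "sub2", "subfailover": True},
    {"subname": "sub3", "subslotname": "sub3", "subfailover": True},
    {"subname": "sub4", "subslotname": "sub4", "subfailover": False},  # not failover-enabled
    {"subname": "sub5", "subslotname": None, "subfailover": True},     # no slot (slot_name = NONE)
]
print(failover_slot_literals(rows))  # → ["'sub1'", "'sub2'", "'sub3'"]
```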

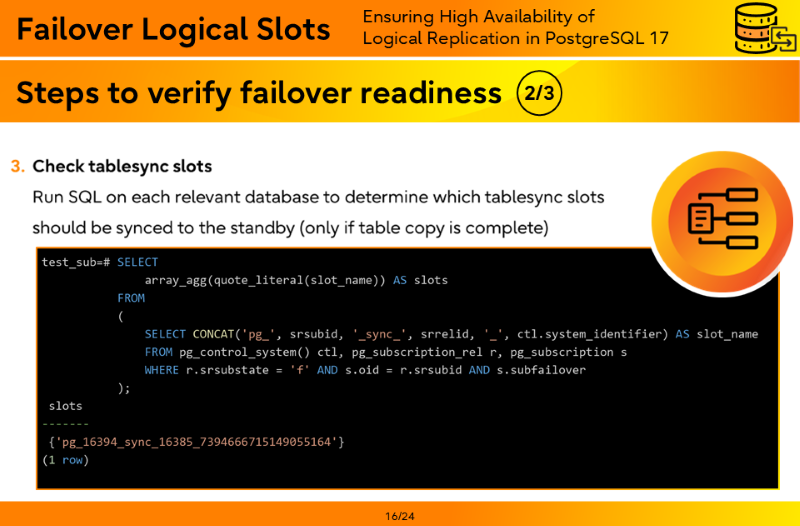

Steps to verify failover readiness

- Check tablesync slots

On the subscriber, run the following query in each relevant database to find the tablesync slots that should be synced to the standby. Only tables whose initial copy has finished (srsubstate = 'f') are included.

```
test_sub=# SELECT array_agg(quote_literal(slot_name)) AS slots
           FROM (
               SELECT CONCAT('pg_', srsubid, '_sync_', srrelid, '_', ctl.system_identifier) AS slot_name
               FROM pg_control_system() ctl, pg_subscription_rel r, pg_subscription s
               WHERE r.srsubstate = 'f' AND s.oid = r.srsubid AND s.subfailover
           );
                    slots
---------------------------------------------
 {'pg_16394_sync_16385_7394666715149055164'}
(1 row)
```
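The query relies on the naming pattern of tablesync slots: pg_<subscription OID>_sync_<relation OID>_<system identifier>, where the system identifier comes from pg_control_system() on the node running the query. Below is a Python sketch of the same selection. The helper names and sample rows are hypothetical; only 16394, 16385 and the system identifier come from the output above.

```python
def tablesync_slot_name(subid: int, relid: int, system_identifier: int) -> str:
    """Tablesync slot name, following the pattern used in the query above."""
    return f"pg_{subid}_sync_{relid}_{system_identifier}"


def finished_tablesync_slots(rel_states, failover_subids, system_identifier):
    """Slot names for relations whose initial copy has finished ('f')
    in failover-enabled subscriptions.

    rel_states: iterable of (srsubid, srrelid, srsubstate) tuples,
    as they would come from pg_subscription_rel.
    """
    return [
        tablesync_slot_name(subid, relid, system_identifier)
        for subid, relid, state in rel_states
        if state == "f" and subid in failover_subids
    ]


rel_states = [
    (16394, 16385, "f"),  # copy finished: slot should be synced
    (16394, 16390, "d"),  # data still being copied: skipped
    (16400, 16391, "f"),  # subscription without failover: skipped
]
print(finished_tablesync_slots(rel_states, {16394}, 7394666715149055164))
# → ['pg_16394_sync_16385_7394666715149055164']
```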

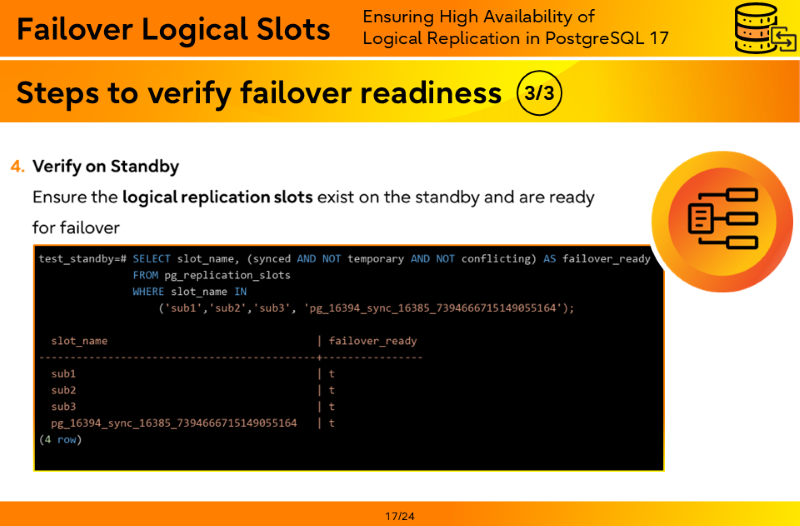

Steps to verify failover readiness

- Verify on Standby

Ensure the logical replication slots exist on the standby and are ready for failover.

```
test_standby=# SELECT slot_name, (synced AND NOT temporary AND NOT conflicting) AS failover_ready
               FROM pg_replication_slots
               WHERE slot_name IN
                   ('sub1', 'sub2', 'sub3', 'pg_16394_sync_16385_7394666715149055164');
                slot_name                | failover_ready
-----------------------------------------+----------------
 sub1                                    | t
 sub2                                    | t
 sub3                                    | t
 pg_16394_sync_16385_7394666715149055164 | t
(4 rows)
```
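The same readiness rule (synced, not temporary, not conflicting) can be applied in a script. A script can also flag slots that are missing from the standby altogether, which the SQL above would silently omit from its output. The Python sketch below assumes the rows of pg_replication_slots have already been fetched as dictionaries. The function name and the sample rows are hypothetical.

```python
def slots_not_failover_ready(rows: list[dict], expected: list[str]) -> list[str]:
    """Expected slot names that are missing on the standby or not failover-ready."""
    ready = {
        row["slot_name"]
        for row in rows
        if row["synced"] and not row["temporary"] and not row["conflicting"]
    }
    return sorted(set(expected) - ready)


expected = ["sub1", "sub2", "sub3", "pg_16394_sync_16385_7394666715149055164"]
rows = [
    {"slot_name": "sub1", "synced": True, "temporary": False, "conflicting": False},
    {"slot_name": "sub2", "synced": True, "temporary": False, "conflicting": False},
    {"slot_name": "sub3", "synced": True, "temporary": True, "conflicting": False},
    # the tablesync slot has not been synced yet, so it has no row
]
print(slots_not_failover_ready(rows, expected))
# → ['pg_16394_sync_16385_7394666715149055164', 'sub3']
```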

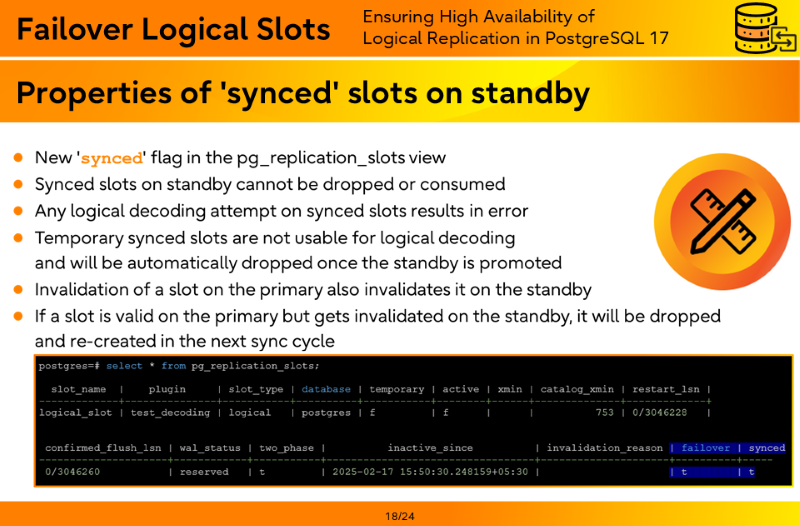

Properties of 'synced' slots on standby

- New 'synced' flag in the pg_replication_slots view

- Synced slots on standby cannot be dropped or consumed

- Any logical decoding attempt on a synced slot results in an error

- Temporary synced slots are not usable for logical decoding and will be automatically dropped once the standby is promoted

- Invalidation of a slot on the primary also invalidates it on the standby

- If a slot is valid on the primary but gets invalidated on the standby, it will be dropped and re-created in the next sync cycle

```
postgres=# SELECT * FROM pg_replication_slots;
  slot_name   |    plugin     | slot_type | database | temporary | active | xmin | catalog_xmin | restart_lsn |
--------------+---------------+-----------+----------+-----------+--------+------+--------------+-------------+
 logical_slot | test_decoding | logical   | postgres | f         | f      |      |          753 | 0/3046228   |

 confirmed_flush_lsn | wal_status | two_phase |          inactive_since          | invalidation_reason | failover | synced
---------------------+------------+-----------+----------------------------------+---------------------+----------+--------
 0/3046260           | reserved   | t         | 2025-02-17 15:50:30.248159+05:30 |                     | t        | t
```

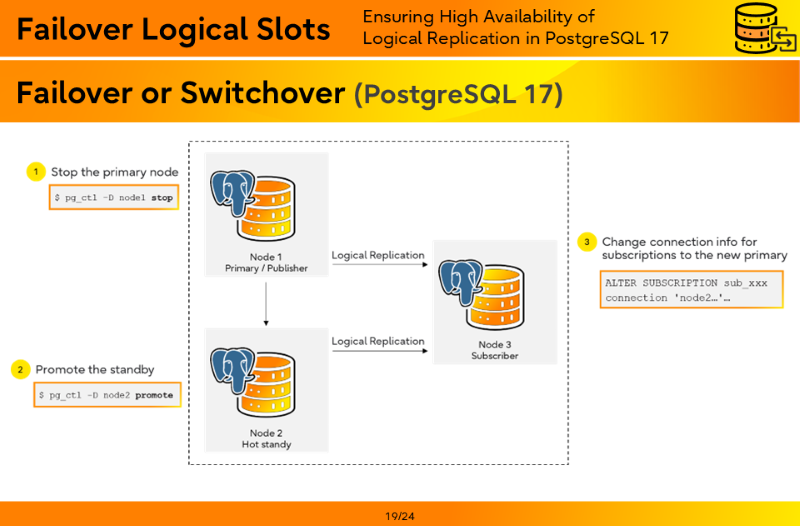

Failover or Switchover (PostgreSQL 17)

- Stop the primary node

```shell
$ pg_ctl -D node1 stop
```

- Promote the standby

```shell
$ pg_ctl -D node2 promote
```

- Change the connection info of the subscriptions to point to the new primary

```sql
ALTER SUBSCRIPTION sub_xxx CONNECTION 'node2…'…
```

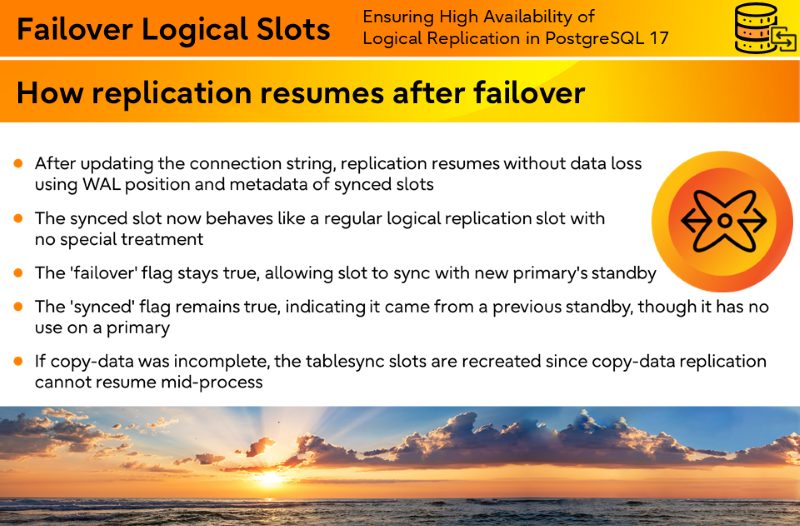

How replication resumes after failover

- After the connection string is updated, replication resumes without data loss, using the WAL positions and metadata of the synced slots

- The synced slot now behaves like a regular logical replication slot, with no special treatment

- The 'failover' flag stays true, so the slot can be synced to the new primary's standbys

- The 'synced' flag remains true, indicating that the slot was created by synchronization while this server was a standby; it has no effect on a primary

- If the initial table copy (copy_data) was incomplete, the tablesync slots are re-created, because an interrupted table copy cannot resume mid-way

Limitations

- No support for cascading standby sync

  - Slot synchronization happens only between the primary and its directly connected standbys

- Applies only to logical replication slots

  - Physical replication slots are not supported for syncing

Summary

With PostgreSQL 17, logical replication is now more resilient and failover-ready!

- New failover property for logical slots

- Failover-ready logical slots ensure logical replication continues after failover

- Reduces downtime & manual re-syncing by preserving logical slots on standby

- Supports both manual and automatic slot synchronization for flexibility

- Ensures the standby stays ahead of the subscribers (with synchronized_standby_slots), minimizing data loss

References

- PostgreSQL documentation

- https://www.postgresql.org/docs/current/logical-replication.html

- https://www.postgresql.org/docs/current/warm-standby-failover.html#WARM-STANDBY-FAILOVER

- https://www.postgresql.org/docs/current/logical-replication-failover.html#LOGICAL-REPLICATION-FAILOVER

- https://www.postgresql.org/docs/current/logicaldecoding-explanation.html#LOGICALDECODING-REPLICATION-SLOTS-SYNCHRONIZATION

- Feature thread

Conclusion

PostgreSQL 17 marks a significant advancement in high availability for logical replication. The introduction of failover slots addresses a longstanding challenge: keeping replication running seamlessly through failover events.

By enabling automatic synchronization of logical replication slots between primary and standby servers, PostgreSQL 17 reduces the complexity and potential downtime associated with manual interventions.

Throughout the session, we delved into the mechanics of logical replication, the evolution of replication slots, and the intricacies of configuring failover slots. The discussions underscored the importance of understanding both the theoretical and practical aspects to effectively implement these features in real-world scenarios.

Sharing these insights and the accompanying slides has been a rewarding experience. I hope they serve as a valuable resource for those looking to enhance their PostgreSQL setups with robust high-availability solutions. As always, the PostgreSQL community continues to inspire with its commitment to innovation and resilience.